A Gentle Introduction to Machine Learning for Games

Hello! If you're reading this, you probably have some combined interest in machine learning and games. Maybe you've seen the results produced by research teams and would like to incorporate such technology into your own game. Or perhaps you are just curious what machine learning is all about. If this describes you, great! You've come to the right place.

In this post, I'm going to give an overview of how I go about using machine learning to develop AI for my games. I'll be making references to SmartEngine, a game-centric machine learning library I've been developing, but the concepts apply to any framework you use. This article is intended for developers, but there is some general knowledge that can be useful to all disciplines.

Machine learning is an exciting and rapidly growing field with uses across all industries. Within the game industry, there are many use cases. The most obvious (and the one I'll be discussing here) relates to controlling non-player characters. Less obvious and more advanced use cases include content generation. For example, generating a base character model from a photograph of a person, blocking out a level from a hand-drawn sketch, or generating natural looking animations. These are great topics for another post. For now, let's focus on non-player characters.

|

| SmartEngine used to control characters in capture the flag demo |

So, what do I mean by machine learning and how is it different from AI you typically see in games? Traditional AI in games involve directly hardcoding behavior by writing some form of state machine. The AI generated this way is very limited. It is generally simple, predictable, and only as smart as the programmer who developed it. In short, boring. On the flip side, with machine learning, the idea is that your characters learn or are taught how to behave instead of being explicitly told what to do. Instead of directly hardcoding behavior, you set high level goals and the AI figures out the behavior necessary to meet those goals. This leads to emergent behavior - the AI acts in a way that can be surprising, clever, and challenging to the player. In short, exciting. Most machine learning today is accomplished using neural networks. By using neural networks, you'll produce AI that has natural, human-like tendencies. This includes both extremely intelligent behavior and the occasional goofy behavior. A neural network based AI was developed by researchers at Google's DeepMind to play StarCraft II and it performed so well, it beat even the best pro players! It ended up surprising opponents by using strategies not seen before in competitive play.

You might have heard of neural networks before. If you are a computer scientist, you may even taken a grad level course on neural networks. I heard about them many years before really understanding what they are about. Knowing very little, they seemed daunting and complex. But after jumping in, I discovered they are pretty intuitive and simple in nature, and magical at the same time. I wish I had discovered them earlier in my career.

At a high level, neural networks try to mimic the structure of the brain. Layers of "neurons" are interconnected with each other. Each layer takes in inputs, either given to it by the user or taken from the previous layer, applies a transformation on the inputs, and produces an output. The transformation is typically a multiplication by some weighted values, an addition (called a bias), followed by an activation function.

|

|

Basic structure of a neural network |

The weights in the picture above give the network its intelligence. They are initially assigned a random value and through training, are massaged to values that give us appropriate output. The activation is a small function that is applied at the end to either bound the output or promote a certain range of values. SmartEngine allows for a dozen of the most common ones, including sigmoid, tanh, relu, and selu. The activation is optional. Most of the time you want one, but there are certain cases where one shouldn't be used. If activation functions are confusing you, don't worry - for now, just know that they exist.

The inputs and outputs in the figure above are what you as the developer define. They are always float values, but you have flexibility over how many and what they represent. The beauty of neural networks is that they can generally map any input to any output, if such a mapping exists. The only caveat is that the number you choose and the "meaning" of the input / output must be fixed for each network.

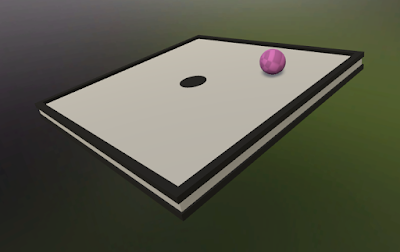

Let's continue forward with an example. I'll take a simplified version of the first project in the SmartEngine Unity samples. In that example, there is a platform that wants to balance a ball. (The example goes one step further with the platform trying to bring the ball to rest at the exact center as quickly as possible, but you can ignore that bit for now if you choose.) For this example, I might have inputs (ball X position, ball Y position, ball X velocity, ball Y velocity) and outputs (torque X, torque Y). To use the network, each frame I feed in the current input values of the ball, execute the network, and apply the output torque to the platform. Physics handles the actual simulation of the platform and ball. Where did those inputs / outputs come from? You need to use a little critical thinking and imagine what pieces of information would be useful to you if you were to control the platform. You'd see the ball's position and velocity, and would need to control the rotation of the platform.

|

| "Balance Ball" SmartEngine Unity example project |

To start using this neural network, we need to first create it in the form of a graph. SmartEngine is designed to abstract away complex functionality into easy-to-use parts, while also giving you control to design and use the graph in the way you want. We could implement the picture above by stringing together multiply and addition nodes, but SmartEngine provides a neuron layer node that handles this for you. Additionally, we could create the graph structure in C++ (or C# in Unity), but it's easier to define it in json and let the engine generate the graph. In a future version, this will be made even easier via a node based UI.

Here is what the JSON might look like for our ball balancing platform graph:

{

"Name": "Platform Graph",

"Nodes": [

{

"Name": "Input",

"Type": "BufferInput",

"Parameters": {

"Dimension": 4

}

},

{

"Name": "Layer1",

"Type": "NeuronLayer",

"Parameters": {

"Input": "Input",

"Type": "Linear",

"ActivationType": "Selu",

"NeuronCount": 32

}

},

{

"Name": "Layer2",

"Type": "NeuronLayer",

"Parameters": {

"Input": "Layer1",

"Type": "Linear",

"ActivationType": "Selu",

"NeuronCount": 16

}

},

{

"Name": "OutputLayer",

"Type": "NeuronLayer",

"Parameters": {

"Input": "Layer2",

"Type": "Linear",

"ActivationType": "None",

"NeuronCount": 2

}

}

]

}

Let's dissect this a bit. First we have the name of the graph, "Platform Graph". Nothing fancy here. Following that is a list of nodes in the graph. The first is of type "BufferInput". This nodes allows us to input values into the graph. The dimension is set to 4 because we have 4 inputs (2 position, 2 velocity). That is fed into a series of neuron layers. The first has 32 neurons internally and an activation of Selu. That feeds into a layer of 16 neurons and finally to an output layer with 2 neurons. Those 2 neurons are our 2 output torque values (X & Y).

You may look at this and see a lot of "magic" values. Why did I choose 3 neuron layers? Why the chosen number of neurons in each layer? Why the chosen activations? I'll let you in on my thought process.

I typically start with 3 layers for all things. I find that it gives enough complexity to do interesting things without being so complex that it has many "edge cases" where things go awry. Two layers would also be a good place to start. "Edge case gone awry" here could mean "when the ball is at this one particular place on the platform, the platform flings it off into space". The number of neurons is also a bit arbitrary, but I usually start with between 24-48. I like to think of the number of layers as how complex a function the network can map (or how complex our behavior) and the number of neurons as the capacity for unique input values. I also reduce the neuron count gradually between the input and output layers. The only thing fixed is the number of neurons in the output layer. That layer must always have a number of neurons equal to the number of outputs we want.

For this example, we could have chosen from a handful of activations with little impact on performance. I like to start with Selu and change from there, but I encourage you to experiment with the different activations. The output layer has no activation. The idea is that we don't want to influence the output of the final transformation. An alternative could be to use Tanh, which is symmetric around 0 and bounds the output between [-1, 1]. We would then multiply by some factor when applying the torque to the physics engine. Selu would not be a good choice because it handles negative numbers differently than positive.

Okay, so we have a graph defined. If we were to instantiate it now and feed it to the physics engine, we'd be pretty disappointed with the results. We would see the platform completely fail, turning in a way that might even fling the ball into space or simply letting it fall off. That is because the weights of the neuron layers in the graph have been initialized with random values and so the graph gives more or less random output. In order for the neural network to produce results we want, we must first train it.

Training is a huge topic of discussion, so I'm going to gloss over the details and just give a high level overview of the process. SmartEngine provides three different methods of training: gradient descent, reinforcement learning, and genetic algorithms. Gradient descent is great when you exactly how the inputs should map to the outputs. The network will quickly learn the mapping and be able to generalize that mapping to values not yet seen. Reinforcement learning is a great tool when you don't know the exact output, but can instead give a reward for good behavior. For instance, we could give the network a small reward for each frame the ball is on the platform. The trainer will adjust the network to maximize this reward. The final method is the use of a genetic algorithm. This is great when the decision of performance is more complicated than a simple reward system, but work perfectly well with a simple scoring system. The genetic algorithm can be used both when you know the output and when you don't. It scales well to multiple machines and is the only training method that is gradient free. Overall, it's my favorite training method and is the one we'll be talking about here.

A genetic algorithm works by creating many variations of a graph (called chromosomes and can be anywhere from 15 to 300+) and runs them through a set of scenarios or tasks. You give a score to each chromosome based on how well it performed in the tasks. After all the tasks are complete, you step the trainer and that's where the magic begins. The trainer will internally sort all the chromosomes based on score, then throw out underperformers and replace them with new variations. These new variations will have a mixture of weights from the previous generation along with random mutations. Some can even be completely randomized. The mixing of weights helps propagate learning to future generations, while the mutation helps us explore new behavior. The process then repeats until we are satisfied with the performance of the top chromosome, which we save off.

|

| The genetic trainer has a set of chromosomes. Chromosomes are assigned to tasks. Game instances execute the tasks and return a score. |

In our platform example, each chromosome is a different network that produces unique output for the platform's torque. To measure a chromosome's performance, we put it through a series of tasks. The task randomizes the ball's start location and simulates X seconds. We score the chromosome based on how long it kept the ball on the platform (or in the case of the real Unity example, how close the ball was to the center over the duration of the task). It's extremely important that the tasks are fair for each chromosome. So the set of tasks for each chromosome must have the same starting conditions (the ball position) so that we are comparing apples to apples. It's also useful to give a new set of starting positions every generation to promote a generalized good behavior instead of specifically good behavior.

Time is always a factor when training. Genetic training is nice because it can be heavily parallelized. If I have 1000 tasks I need to perform, I could speed through the training with 1000 instances of my game. Here, something like Azure or AWS can be of great use, but you can go far with a single multi-core machine. One last tip with genetic training: if you have a choice between a lot of chromosomes assigned a small number of tasks or a small number of chromosomes assigned a large number of tasks, prefer the former. The idea is that the more variations we can produce, the faster and better our results.

And that's pretty much it for this article. To recap, we touched on what neural networks are and why they should be used in games. We developed a network model for an example scenario and talked about how to go about training it. And after training, we are left with a graph with a specific set of weights that is able to handle any input we give it.

SmartEngine is available at www.smartengine.ai. It's completely free for Indie developers to use for Windows / Linux / Mac and has special integration for Unity 3D and Unreal. I encourage you to check it out and play around with the example projects.

There's much to discuss still, but it will have to wait until next time. Take care and leave your thoughts in the comments below!

Comments

Post a Comment